Hi Labelbox Community! ![]()

This guide demonstrates how to run a comparative evaluation between YOLOv11, the latest high-efficiency release from Ultralytics— YOLOv26 models.

By comparing their performance metrics (Confidence vs. Speed) on your actual data, you can automatically upload the superior model’s predictions to Labelbox as pre-labels.

We run a “head-to-head” evaluation on your live Labelbox data to measure:

-

Mean Confidence: How certain is each model version about its detections?

-

Efficiency: What is the real-world latency difference between the refined YOLOv11 and the newer YOLOv26 architectures?

The “Champion” model is then used to populate your project. This allows your team to simply review and adjust boxes rather than drawing them

from scratch, drastically reducing labeling time and costs. This also helps if you want to have multiple workflows in your project

More about how to set up workflows based on filters (consensus agreement, features, labeling & reviewing time, etc) here.

1. Prerequisites

Before you begin, ensure you have your Labelbox API key and the necessary Python libraries installed.

Install dependencies

%pip install ultralytics torch torchvision pillow numpy tqdm labelbox requests -q

You will need:

-

Labelbox API Key: Found under Settings > Workspace > API Keys.

-

Project ID & Dataset ID: Available in the URLs of your respective Labelbox Project and Dataset

-

Model Weights: Custom .pt files (e.g., yolo11n.pt, yolo26n.pt, and yolo26s.pt).

2. The Workflow Logic

The following script follows these steps:

-

Initialize: Loads both YOLOv26 and YOLOv11 models.

-

Batching: Sends images from your Labelbox Dataset to a specific Project using project.create_batch().

-

Dual Inference: Runs both models on every image to collect average confidence and inference speed.

-

Selection: Compares the “Mean Confidence” of both models.

-

MAL Upload: Uploads the predictions of the higher-confidence model to the Labelbox editor.

3. The Python Implementation

A few pointers to keep in mind -

-

The max_images is set here as 3 but it can be changed depending on your dataset size.

-

Make sure you set up the ontology tools exactly as defined under “ontology_mapping”.

Implementation Script

import os import time import uuid import requests import numpy as np from io import BytesIO from pathlib import Path from PIL import Image from typing import List, Dict, Any, Optional, Tuple from ultralytics import YOLO from tqdm import tqdm import labelbox as lb import labelbox.data.annotation_types as lb_annotation_types import labelbox.types as lb_types from labelbox import MALPredictionImport class YOLOChampionEvaluator: def __init__(self, model_paths: List[str] = ['yolo11n.pt', 'yolo26n.pt', 'yolo26s.pt'], api_key: Optional[str] = None, dataset_id: Optional[str] = None, project_id: Optional[str] = None, max_images: Optional[int] = None, conf_threshold: float = 0.25): # Load all models into a dictionary self.models = {} for path in model_paths: print(f"Loading Model: {path}...") self.models[path] = YOLO(path) self.api_key = api_key or os.getenv('LABELBOX_API_KEY') self.dataset_id = dataset_id self.project_id = project_id self.max_images = max_images self.conf_threshold = conf_threshold if not self.api_key: raise ValueError("Labelbox API key is required.") self.client = lb.Client(api_key=self.api_key) self.dataset = self.client.get_dataset(self.dataset_id) self.project = self.client.get_project(self.project_id) def run_inference(self, image: Image.Image, model: YOLO) -> Tuple[Dict[str, Any], float]: start_time = time.time() results = model.predict(source=image, imgsz=640, verbose=False, conf=self.conf_threshold)[0] inference_time = round(time.time() - start_time, 3) detections = [] confidences = [] if results.boxes is not None: boxes = results.boxes.xyxy.cpu().numpy() confs = results.boxes.conf.cpu().numpy() class_ids = results.boxes.cls.cpu().numpy().astype(int) for i in range(len(boxes)): confidences.append(float(confs[i])) detections.append({ 'class_name': results.names[int(class_ids[i])], 'confidence': float(confs[i]), 'bbox': [float(boxes[i][0]), float(boxes[i][1]), float(boxes[i][2]), float(boxes[i][3])] }) avg_conf = np.mean(confidences) if confidences else 0.0 return {'detections': detections, 'num_detections': len(detections), 'avg_conf': avg_conf}, inference_time def process_and_upload_best(self, ontology_mapping: Dict[str, str]): # 1. Get Data Rows and Create Batch all_data_rows = list(self.dataset.data_rows()) data_rows = all_data_rows[:self.max_images] if self.max_images else all_data_rows batch_name = f"Multi_YOLO_Eval_{uuid.uuid4().hex[:5]}" print(f"Creating Batch '{batch_name}' with {len(data_rows)} images...") self.project.create_batch(name=batch_name, data_rows=data_rows, priority=5) # 2. Multi-Inference Loop # Store results as: { model_name: [list_of_results] } all_model_results = {name: [] for name in self.models.keys()} for dr in tqdm(data_rows, desc="Evaluating Models"): response = requests.get(dr.row_data) img = Image.open(BytesIO(response.content)).convert('RGB') for name, model in self.models.items(): res, t = self.run_inference(img, model) all_model_results[name].append({'data_row': dr, 'results': res, 'time': t}) # 3. Decision Engine: Compare Model Performance (Based on Avg Confidence) scores = {} for name, results in all_model_results.items(): scores[name] = np.mean([r['results']['avg_conf'] for r in results]) # Determine the winner winner_name = max(scores, key=scores.get) winner_results = all_model_results[winner_name] self._display_comparison(all_model_results, winner_name) # 4. Prepare MAL with the Champion Model print(f"Preparing MAL predictions using champion: {winner_name}...") predictions = [] for res in winner_results: dr = res['data_row'] annotations = [] for det in res['results']['detections']: if det['class_name'] in ontology_mapping: annotations.append(lb_annotation_types.ObjectAnnotation( name=ontology_mapping[det['class_name']], value=lb_annotation_types.Rectangle( start=lb_annotation_types.Point(x=det['bbox'][0], y=det['bbox'][1]), end=lb_annotation_types.Point(x=det['bbox'][2], y=det['bbox'][3]) ) )) if annotations: predictions.append(lb_types.Label(data={"global_key": dr.global_key}, annotations=annotations)) # 5. Upload Winning Predictions if predictions: import_name = f"Winner_{winner_name.split('.')[0]}_{uuid.uuid4().hex[:5]}" print(f"Uploading {len(predictions)} labels from {winner_name}...") job = MALPredictionImport.create_from_objects( client=self.client, project_id=self.project_id, name=import_name, predictions=predictions ) job.wait_till_done() print(f"MAL Upload Successful. Champion: {winner_name}") def _display_comparison(self, all_results: Dict[str, List[Dict]], winner: str): print("\n" + "="*85) print(f"{'MULTI-MODEL EVALUATION SUMMARY':^85}") print("="*85) header = f"{'Metric':<25}" for name in all_results.keys(): header += f" | {name:<15}" print(header) print("-" * 85) # Row data storage times, confs, detections = "Avg Inference (sec) ", "Avg Confidence ", "Total Detections " for name, res_list in all_results.items(): avg_time = np.mean([r['time'] for r in res_list]) avg_conf = np.mean([r['results']['avg_conf'] for r in res_list]) total_det = np.sum([r['results']['num_detections'] for r in res_list]) times += f" | {avg_time:<15.3f}" confs += f" | {avg_conf:<15.3f}" detections += f" | {total_det:<15}" print(times) print(confs) print(detections) print("-" * 85) print(f" SELECTED CHAMPION: {winner}") print("="*85 + "\n") def main(): API_KEY = "" PROJECT_ID = "" DATASET_ID = "" # Now passing a list including YOLO11 evaluator = YOLOChampionEvaluator( model_paths=['yolo11n.pt', 'yolo26n.pt', 'yolo26s.pt'], api_key=API_KEY, dataset_id=DATASET_ID, project_id=PROJECT_ID, max_images=3 ) #add more ontology mapping based on your data ontology_mapping = { "person": "Person", "car": "Car", "bus": "Bus", "truck": "Truck", "dog": "Dog", "cat": "Cat" } evaluator.process_and_upload_best(ontology_mapping) if __name__ == "__main__": main()

4. Result Format

After execution, you will see a technical log similar to this:

Results Log

===============================================================================

MULTI-MODEL EVALUATION SUMMARY

===============================================================================

Metric | yolo11n.pt | yolo26n.pt | yolo26s.pt

-------------------------------------------------------------------------------

Avg Inference (sec) | 0.344 | 0.165 | 0.420

Avg Confidence | 0.764 | 0.807 | 0.788

Total Detections | 8 | 8 | 9

-------------------------------------------------------------------------------

SELECTED CHAMPION: yolo26n.pt

===============================================================================

-

Inference: Useful for understanding real-time performance.

-

Confidence: Our primary driver for “Winner” selection. High confidence usually correlates with higher precision.

-

Detections: A high detection count on the slower model compared to the fast model might indicate better recall (finding more objects).

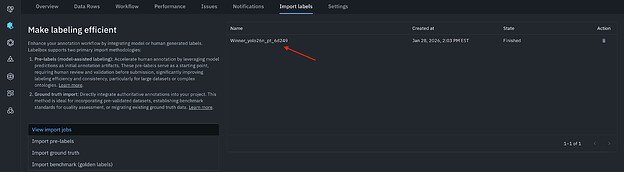

4. Visualization in the Labelbox Editor

You can see the imports of your projects by going into the specific project → ‘Import labels’ → ‘view import jobs’

You can read more about pre-labels in our docs!